I didn’t plan my homelab VCF deployment well enough. I only have 3 hosts available, but using some popular tricks, I was able to deploy a full management domain on them. During deployment, I blindly followed the workbook and deployed a three-node NSX Manager cluster.

It worked well, but then I realized how many resources it consumed. If I ever wanted to use this lab for more than just management VMs, I would need to cut the overhead. I decided to remove two of the three NSX Manager nodes, leaving a single-node cluster.

Removing NSX Manager nodes is pretty straightforward. I used the tips from this article by Ronaldpj.

This is a very bad idea in a production environment, but it was actually a pretty good way to free up some resources in my lab. After deleting those two nodes, NSX remained functional, and I got back around 30 GB of RAM.

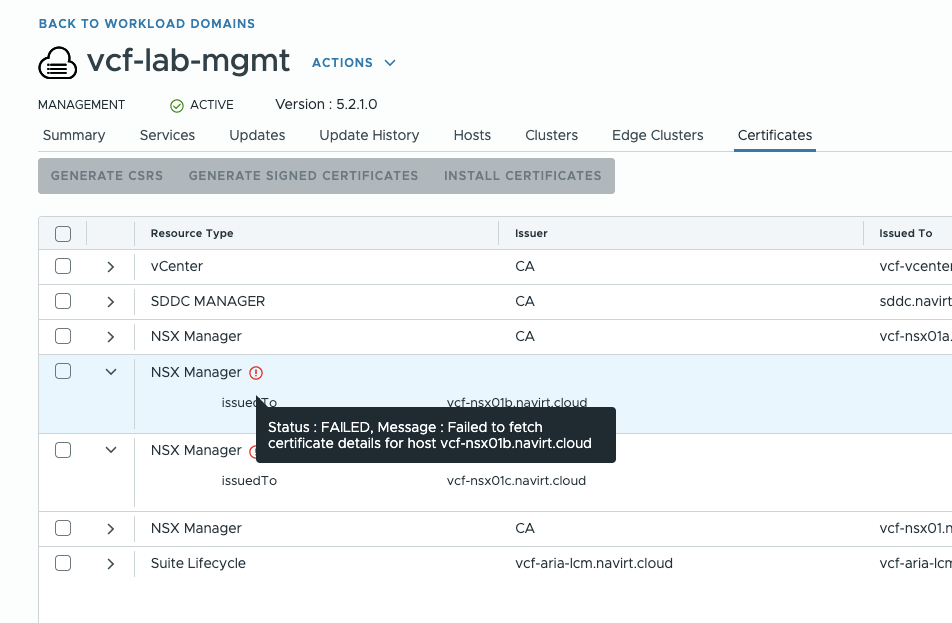

However, because everything had been deployed by SDDC Manager, not everything went cleanly. In the Certificates tab, SDDC Manager still tried to reach the two missing nodes.

My inner monk could not leave it unresolved.

There is no official, supported way to remove those resources from SDDC Manager’s inventory, so I went the brute-force way: tampering with the PostgreSQL database.

NEVER TRY THIS IN PRODUCTION!

Before messing with SDDC Manager, I obviously made a snapshot.

To access the database, I SSHed into SDDC Manager using the vcf account:

```shell
ssh vcf@sddc.navirt.cloud
```

Then I escalated to root:

```shell
su
```

To open the PostgreSQL shell, I typed:

```shell
psql -h localhost -U postgres
```

and connected to the platform database:

```
\c platform
```

For better readability, I enabled expanded display:

```
\x
```

It took me a while to locate the right entries. The NSX Manager configuration can be found in the nsxt table:

```
\d nsxt
```

It looks like this:

```
                              Table "public.nsxt"
        Column        |          Type          | Collation | Nullable | Default
----------------------+------------------------+-----------+----------+---------
 id                   | character varying(255) |           | not null |
 creation_time        | bigint                 |           | not null |
 modification_time    | bigint                 |           | not null |
 status               | character varying(255) |           |          |
 version              | character varying(255) |           |          |
 cluster_ip_address   | character varying(255) |           |          |
 cluster_fqdn         | character varying(255) |           |          |
 is_shared            | boolean                |           |          |
 nsxt_cluster_details | text                   |           |          |
 configuration        | text                   |           |          |
Indexes:
    "nsxt_pkey" PRIMARY KEY, btree (id)
```

Let’s take a look at the contents of nsxt_cluster_details:

```sql
SELECT id, nsxt_cluster_details FROM nsxt;
```

The output is a JSON array that lists every NSX Manager node with its configuration:

```
[{"fqdn":"vcf-nsx01a.navirt.cloud","vmName":"vcf-nsx01a","ipAddress":"10.24.13.81","id":"0be2bdc6-ec4e-4149-b5af-839597471188"},{"fqdn":"vcf-nsx01b.navirt.cloud","vmName":"vcf-nsx01b","ipAddress":"10.24.13.82","id":"a7e36256-8a52-4ee5-9373-215fd3d7542b"},{"fqdn":"vcf-nsx01c.navirt.cloud","vmName":"vcf-nsx01c","ipAddress":"10.24.13.83","id":"e8c645d9-d84f-4430-8ff8-6b8de2f0f550"}]
```

So, at this point, I had some changes to make. For simplicity, I copied the array into my text editor and removed nodes b and c. Then it was time to update the table, using the row id returned by the same query:

```sql
UPDATE nsxt
SET nsxt_cluster_details = '[{"fqdn":"vcf-nsx01a.navirt.cloud","vmName":"vcf-nsx01a","ipAddress":"10.24.13.81","id":"0be2bdc6-ec4e-4149-b5af-839597471188"}]'
WHERE id = '22ae0975-d056-453f-83ae-7213f77cd59a';
```

Everything went fine; psql confirmed it with UPDATE 1.
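Instead of editing the JSON by hand, the same change could be scripted. The Python sketch below is my own illustration, not anything SDDC Manager provides: it keeps only the node FQDNs you list, refuses to continue if one of them is not found (so a typo cannot silently empty the array), and builds the UPDATE statement with single quotes doubled so the value is a valid SQL string literal. That quoting assumes standard_conforming_strings is on, which is the PostgreSQL default. The row id and FQDNs are the ones from my lab; substitute your own.

```python
from __future__ import annotations

import json


def filter_cluster_details(details_json: str, keep_fqdns: set[str]) -> str:
    """Return the nsxt_cluster_details array reduced to the nodes in keep_fqdns."""
    nodes = json.loads(details_json)
    kept = [node for node in nodes if node["fqdn"] in keep_fqdns]
    # Fail loudly on a typo instead of silently writing back an empty array.
    missing = keep_fqdns - {node["fqdn"] for node in kept}
    if missing:
        raise ValueError(f"FQDNs not found in cluster details: {sorted(missing)}")
    # Compact separators reproduce the original whitespace-free format.
    return json.dumps(kept, separators=(",", ":"))


def sql_literal(value: str) -> str:
    """Quote a value as a standard SQL string literal (single quotes doubled)."""
    return "'" + value.replace("'", "''") + "'"


def build_update(row_id: str, details_json: str) -> str:
    """Build the UPDATE statement for the nsxt row identified by row_id."""
    return (
        "UPDATE nsxt\n"
        f"SET nsxt_cluster_details = {sql_literal(details_json)}\n"
        f"WHERE id = {sql_literal(row_id)};"
    )


if __name__ == "__main__":
    # Values from my lab: the SELECT output and the nsxt row id.
    current = (
        '[{"fqdn":"vcf-nsx01a.navirt.cloud","vmName":"vcf-nsx01a",'
        '"ipAddress":"10.24.13.81","id":"0be2bdc6-ec4e-4149-b5af-839597471188"},'
        '{"fqdn":"vcf-nsx01b.navirt.cloud","vmName":"vcf-nsx01b",'
        '"ipAddress":"10.24.13.82","id":"a7e36256-8a52-4ee5-9373-215fd3d7542b"},'
        '{"fqdn":"vcf-nsx01c.navirt.cloud","vmName":"vcf-nsx01c",'
        '"ipAddress":"10.24.13.83","id":"e8c645d9-d84f-4430-8ff8-6b8de2f0f550"}]'
    )
    reduced = filter_cluster_details(current, {"vcf-nsx01a.navirt.cloud"})
    print(build_update("22ae0975-d056-453f-83ae-7213f77cd59a", reduced))
```

With my values, this prints the same UPDATE statement I ran above, ready to paste into the psql session. Generating the statement rather than connecting to the database keeps the script dependency-free and leaves the actual write to a human.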

The last thing to do was to restart services:

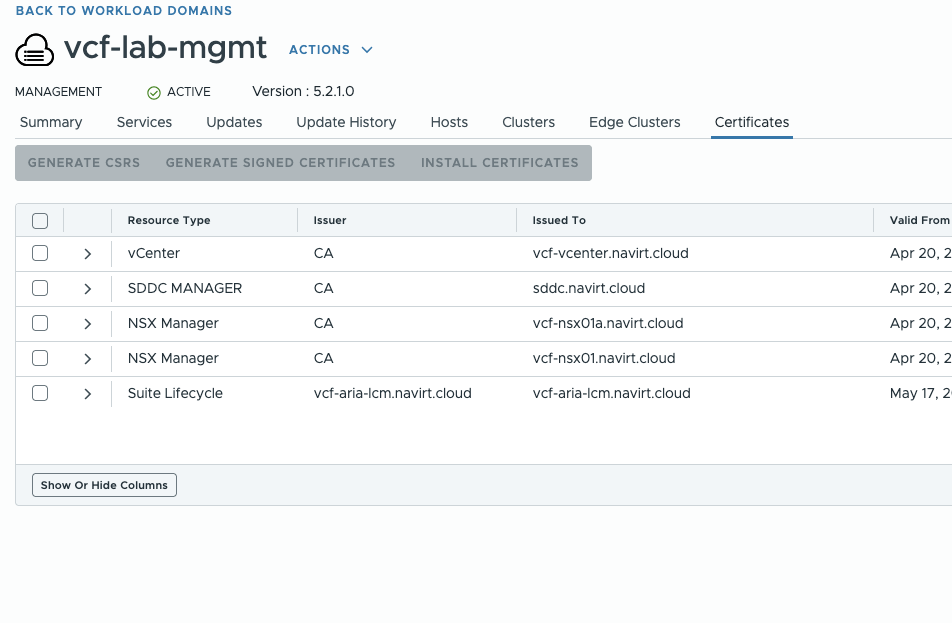

systemctl restart domainmanagerNavigating back to the SDDC Manager UI to check the result and… voila!

Dirty entries are gone, everything is clean. My mind is calm now.

A little disclaimer at the end:

I have no idea whether this change will have any repercussions. I will keep an eye on it and post an update if I experience any issues.
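One way to keep an eye on it is to re-run the SELECT from earlier now and then and check the stored value. Because nsxt_cluster_details is a plain text column rather than json or jsonb, PostgreSQL never validated what I wrote into it. The Python sketch below is a hypothetical helper of my own: it reports malformed JSON, entries missing any of the four keys seen in my deployment (fqdn, vmName, ipAddress, id; an observation, not a documented schema), invalid IP addresses, and any node listed that I don’t expect, or expected but missing.

```python
from __future__ import annotations

import ipaddress
import json

# Keys observed in my deployment's node entries; an assumption, not a documented schema.
REQUIRED_KEYS = {"fqdn", "vmName", "ipAddress", "id"}


def _is_ip_string(value) -> bool:
    """True if value is a string holding a valid IPv4 or IPv6 address."""
    if not isinstance(value, str):
        return False  # ip_address() would also accept integers, so reject them here
    try:
        ipaddress.ip_address(value)
    except ValueError:
        return False
    return True


def check_cluster_details(details_json: str, expected_fqdns: set[str]) -> list[str]:
    """Return a list of problems found in an nsxt_cluster_details value (empty if clean)."""
    try:
        nodes = json.loads(details_json)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(nodes, list):
        return ["expected a JSON array of node objects"]
    problems = []
    seen = set()
    for index, node in enumerate(nodes):
        if not isinstance(node, dict):
            problems.append(f"entry {index} is not a JSON object")
            continue
        missing = REQUIRED_KEYS - set(node)
        if missing:
            problems.append(f"entry {index} is missing keys: {sorted(missing)}")
        ip = node.get("ipAddress")
        if ip is not None and not _is_ip_string(ip):
            problems.append(f"entry {index} has an invalid ipAddress: {ip!r}")
        if isinstance(node.get("fqdn"), str):
            seen.add(node["fqdn"])
    # Compare the listed nodes with the ones that should actually exist.
    for fqdn in sorted(seen - expected_fqdns):
        problems.append(f"stale node still listed: {fqdn}")
    for fqdn in sorted(expected_fqdns - seen):
        problems.append(f"expected node missing: {fqdn}")
    return problems


if __name__ == "__main__":
    # Paste the current value from the SELECT output here.
    stored = ('[{"fqdn":"vcf-nsx01a.navirt.cloud","vmName":"vcf-nsx01a",'
              '"ipAddress":"10.24.13.81","id":"0be2bdc6-ec4e-4149-b5af-839597471188"}]')
    issues = check_cluster_details(stored, {"vcf-nsx01a.navirt.cloud"})
    print("OK" if not issues else "\n".join(issues))
```

Returning a list of problems instead of raising on the first one means a single run shows everything that drifted, which suits an occasional manual check.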

Did I miss anything important? Let me know!
